#Kernel-based Virtual Machine


DISCOVER THE NEW SPEEDYKVM: UNLEASHING POWER AND PERFORMANCE

Discover SpeedyKVM's new site! Enhanced KVM, BareMetal, Colocation, NVMe storage, 10GB uplink across 6 locations. Unleash robust performance!

Welcome to the revamped SpeedyKVM website, where cutting-edge technology meets robust performance! As a leader in Kernel-based Virtual Machine (KVM) hosting, SpeedyKVM now offers an enhanced suite of services designed to meet the demanding needs of businesses and developers alike.

Powerful KVM Services

SpeedyKVM’s KVM (Kernel-based Virtual Machine) services have received a substantial upgrade. With advanced hardware specs and optimized performance, users can now enjoy faster, more reliable, and more efficient virtual servers. Whether you need a powerful KVM server for hosting applications, databases, or websites, our improved infrastructure ensures top-tier performance and uptime.

Enhanced Services for Superior Performance

SpeedyKVM has taken its service offerings to the next level by including NVMe storage and 10GB uplinks in its plans. NVMe (Non-Volatile Memory Express) storage provides significantly faster data transfer rates than traditional SATA SSDs, making your virtual servers more responsive and efficient. The 10GB uplink provides fast, reliable internet connectivity, which is crucial for businesses that require high-speed data transmission and low latency.

Diverse Services for All Needs

In addition to our KVM offerings, we now provide a range of enhanced services. Our BareMetal Servers offer dedicated, uncompromised power for mission-critical applications. For businesses with specific data storage and security needs, SpeedyKVM’s Colocation services offer secure and scalable solutions.

Additional Services and Comprehensive Support

SpeedyKVM’s additional services include SSL certificates, domain services, and WHMCS. All of our solutions are paired with 24/7 support, ensuring that every user’s needs are met with professionalism and expertise. Our user-friendly website and support portal make managing your services a breeze, whether you're a seasoned IT professional or a newcomer to VPS hosting.

Experience the future of KVM hosting with SpeedyKVM’s powerful, reliable, and globally connected services. Visit the new SpeedyKVM website now and take your online presence to the next level!

#SpeedyKVM#KVM hosting#KVM services#BareMetal Servers#Colocation#NVMe storage#10GB uplink#advanced infrastructure#virtual servers#Kernel-based Virtual Machine


On Not Knowing Where to Start

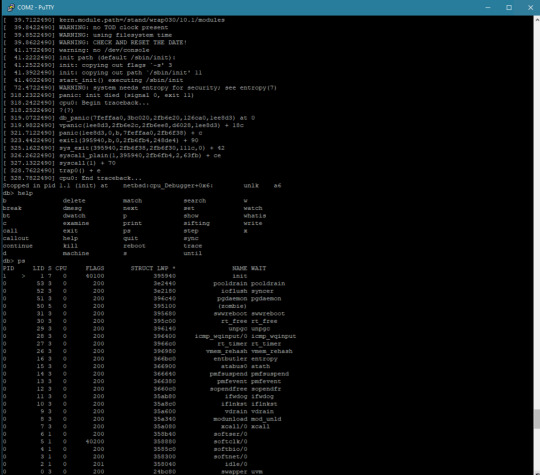

I have made tremendous progress on getting NetBSD to run on Wrap030, my 68030 homebrew computer.

I learned I had completely misunderstood how NetBSD sets up hardware devices (the tag is just a machine-dependent structure to help identify the bus properties the device is attaching to, and the handle is the actual device base address).

I learned how to set up my timer interrupt for use by the kernel for delay() statements & the like, which turned out to be a missing piece in getting serial devices set up (since the driver calls delay() at several points).

I got stuck for days trying to understand why the kernel couldn't mount my disk (endian-swapping strikes again).

Then finally, the kernel prompted me to enter the path for init, the "mother of all processes" as the code calls it.

... which promptly crashed.

and it crashed again. and again. and again.

I am finally out of the kernel. I have reached process 1. The machine is officially running userland code.

And I am completely lost.

I've spent weeks learning how to customize, build, and troubleshoot the kernel; but I am no longer dealing with the kernel.

I caught some bugs in how I was setting up virtual memory space for user processes (invalid stack address that overlapped I/O space). I swapped out some suspicious RAM. I found that the kernel would die in unexpected ways if it got too large (still don't know the cause, but it seems fine if I just keep it small). Still, init just dies with no insight into what it's doing or how far it's getting into its process.

But I'm in unknown territory here, and I'm finding myself quite lost and unsure where to turn next. I'm sure I'll figure it out though. I just have to keep hammering away at it. I've come too far to give up now.

I have a looming deadline I've set for myself. VCFSW 2025 is in just a few weeks and I would love to have this system running in time for the show. It's a long shot — this project is something I've wanted to do for 25 years, and something I've been working towards for 10. I remain cautiously optimistic, knowing full well the scale of what remains.

But look how far I've come.

#mc68030#motorola 68k#motorola 68030#debugging#vcfsw#wrap030#retrotech#vcf#homebrew computer#homebrew computing#retro computing#netbsd#unix#Retrocomputing


Guide to Red Hat Enterprise Linux: The Enterprise Linux Solution

1. Introduction

Introducing Red Hat Enterprise Linux (RHEL)

Red Hat Enterprise Linux (RHEL) is an enterprise-class Linux distribution developed by Red Hat, Inc. Released in 2000, RHEL is designed to be a robust, reliable solution for enterprise and mission-critical environments.

The importance of RHEL in the Linux ecosystem

RHEL is widely used on servers and in cloud environments thanks to its stability, its technical support, and its ability to integrate with a broad range of enterprise technologies. It is known for its focus on security and scalability.

2. History and Philosophy of Red Hat Enterprise Linux

Origin and evolution of RHEL

RHEL is based on the community distribution Fedora and was created to offer a commercial, supported version of Linux. Red Hat releases RHEL versions with long life cycles, which guarantees long-term support for businesses.

RHEL's philosophy and free software

RHEL follows the free and open source software philosophy but adds commercial support, which includes technical assistance, updates, and additional services. This gives businesses the confidence of having professional backing.

3. Key Features of Red Hat Enterprise Linux

Long-term support and stability

RHEL offers extended support for each version, with security and maintenance updates for 10 years. This is crucial for enterprise environments that require long-term stability and reliability.

Package manager

YUM (Yellowdog Updater, Modified) was RHEL's traditional package manager, but it has been replaced by DNF (Dandified YUM) in more recent versions. DNF improves package management and dependency resolution.

Basic commands: sudo dnf install [package], sudo dnf remove [package], sudo dnf update.
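As a rough illustration of the three commands above, the following Python sketch only builds the command lines and prints them. It never runs dnf. The helper name `dnf_command` and the example package `httpd` are assumptions made for this sketch and are not part of the original guide.

```python
import shlex

# Hypothetical helper for illustration: it builds, but never executes,
# the dnf command lines listed in the guide.
DNF_ACTIONS = {"install", "remove", "update"}


def dnf_command(action, package=None, use_sudo=True):
    """Return the argv list for a dnf action; 'update' may omit the package."""
    if action not in DNF_ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    if action != "update" and not package:
        raise ValueError(f"'{action}' needs a package name")
    argv = ["sudo"] if use_sudo else []
    argv += ["dnf", action]
    if package:
        argv.append(package)
    return argv


if __name__ == "__main__":
    # 'httpd' is only an example package name.
    for args in (("install", "httpd"), ("remove", "httpd"), ("update",)):
        print(shlex.join(dnf_command(*args)))
```

Returning a list rather than a string means the result can be passed to `subprocess.run` without shell quoting concerns, if you do decide to execute it.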

Supported package formats

RHEL's native package format is RPM:

.rpm: The native package format of Red Hat and its derivatives.

.deb: Not native to RHEL and not handled by DNF. Installing such packages requires third-party conversion tools such as alien, and the result is not a standard RHEL setup.

Support for virtual and cloud environments

RHEL provides tools and support for virtualization, including KVM (Kernel-based Virtual Machine), and is compatible with cloud platforms such as AWS, Azure, and OpenStack.
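Before relying on KVM, it can help to confirm that the host exposes hardware virtualization. The sketch below is a minimal check that assumes an x86 Linux host: it looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flags in `/proc/cpuinfo` and for the `/dev/kvm` device node. The function names are made up for illustration.

```python
from pathlib import Path


def has_virt_extensions(cpuinfo_text):
    """True if any 'flags' line lists vmx (Intel VT-x) or svm (AMD-V)."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            if "vmx" in flags or "svm" in flags:
                return True
    return False


def kvm_ready(cpuinfo_path="/proc/cpuinfo", kvm_device="/dev/kvm"):
    """Report CPU support and whether the KVM device node is present."""
    cpuinfo = Path(cpuinfo_path)
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    return {
        "cpu_extensions": has_virt_extensions(text),
        "kvm_device": Path(kvm_device).exists(),
    }


if __name__ == "__main__":
    print(kvm_ready())
```

A missing `/dev/kvm` on a CPU that does report the flags usually points to virtualization being disabled in firmware or the kvm kernel modules not being loaded, though this sketch does not try to tell those cases apart.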

4. Red Hat Enterprise Linux Installation Process

Minimum system requirements

Processor: 1 GHz or faster.

RAM: 1 GB minimum, 2 GB or more recommended.

Disk space: 10 GB of free disk space.

Graphics card: Support for a minimum resolution of 1024x768.

DVD drive or USB port for installation.

Downloading and preparing the installation media

Downloading RHEL requires an active Red Hat subscription. A bootable USB drive can be prepared with tools such as Rufus or balenaEtcher.

Step-by-step installation guide

Choosing the installation environment: RHEL's graphical installer, based on Anaconda, offers an intuitive interface for installation.

Configuring partitions: The installer supports automatic and manual partitioning, adapting to different storage configurations.

Configuring the network and selecting software: During installation, you configure the network options and can choose additional packages and features.

First steps after installation

Updating the system: Running sudo dnf update after installation ensures that all software is up to date.

Installing additional drivers and software: RHEL can automatically install additional drivers required by the hardware.

5. RHEL Compared with Other Distributions

RHEL vs. CentOS

Goal: CentOS was a free, unsupported version of RHEL, but it has been replaced by CentOS Stream, which offers a preview of the next RHEL version. RHEL provides technical support and extended updates, while CentOS Stream acts as a bridge between Fedora and RHEL.

Philosophy: RHEL focuses on enterprise support and stability, while CentOS Stream focuses on development and community contribution.

RHEL vs. Ubuntu Server

Goal: Ubuntu Server is designed to be easy to use and administer, with a regular release cycle. RHEL, for its part, focuses on long-term support and on meeting the needs of large enterprises.

Philosophy: Ubuntu Server offers LTS releases for stability, while RHEL provides extensive commercial support and services.

RHEL vs. SUSE Linux Enterprise Server (SLES)

Goal: SLES, developed by SUSE, is similar to RHEL in terms of enterprise support and stability. Both distributions offer extended support and tools for server management.

Philosophy: RHEL and SLES are comparable in terms of enterprise support, but each has its own approach and set of specific tools.

6. Red Hat Tools and Services

Red Hat Satellite

Red Hat Satellite is a systems management solution that enables centralized administration of RHEL systems, including deployment, configuration, and maintenance.

Red Hat Ansible Automation

Ansible Automation is a tool for task automation and configuration management that makes it easier to administer systems at scale.

Red Hat OpenShift

OpenShift is a container and Kubernetes platform managed by Red Hat, well suited to deploying and managing containerized applications.

7. Community and Support

Access to Red Hat support

Red Hat offers professional technical support through its subscriptions, which include 24/7 technical assistance, security updates, and patches.

Community resources and documentation

Red Hat Customer Portal: Access to documentation, user guides, and support forums.

Red Hat Learning Subscription: Online courses and training for RHEL users.

8. Conclusion

RHEL as a robust option for businesses

Red Hat Enterprise Linux is a solid choice for businesses looking for a Linux distribution with professional technical support, stability, and scalability. Its focus on stability and extended support makes it well suited to enterprise and mission-critical environments.

Final recommendations for those considering RHEL

RHEL suits organizations that require technical support and long-term stability, and that are willing to invest in an enterprise Linux solution backed by Red Hat.

9. Frequently Asked Questions (FAQ)

Is RHEL suitable for small businesses?

RHEL is well suited to businesses of all sizes that want a robust enterprise operating system with professional technical support.

What is the difference between RHEL and CentOS?

CentOS was a free, community version of RHEL, but CentOS Stream now serves as a preview of upcoming RHEL versions. RHEL provides enterprise support and extended updates.

How do I get support for RHEL?

Support is obtained through a Red Hat subscription, which includes technical assistance, updates, and access to management tools.

Is RHEL compatible with third-party software?

Yes, RHEL is compatible with a wide range of third-party software and applications, and Red Hat offers support for integrating enterprise solutions.

#Red Hat Enterprise Linux#RHEL#enterprise Linux distribution#Linux#package manager#DNF#RPM#RHEL installation#RHEL technical support#Red Hat Satellite#Red Hat Ansible#Red Hat OpenShift#RHEL comparison#RHEL vs CentOS#RHEL vs Ubuntu Server#RHEL vs SLES#RHEL community#RHEL resources#RHEL updates


Unleashing Efficiency: Containerization with Docker

Introduction: In the fast-paced world of modern IT, agility and efficiency reign supreme. Enter Docker - a revolutionary tool that has transformed the way applications are developed, deployed, and managed. Containerization with Docker has become a cornerstone of contemporary software development, offering unparalleled flexibility, scalability, and portability. In this blog, we'll explore the fundamentals of Docker containerization, its benefits, and practical insights into leveraging Docker for streamlining your development workflow.

Understanding Docker Containerization: At its core, Docker is an open-source platform that enables developers to package applications and their dependencies into lightweight, self-contained units known as containers. Unlike traditional virtualization, where each application runs on its own guest operating system, Docker containers share the host operating system's kernel, resulting in significant resource savings and improved performance.

Key Benefits of Docker Containerization:

Portability: Docker containers encapsulate the application code, runtime, libraries, and dependencies, making them portable across different environments, from development to production.

Isolation: Containers provide a high degree of isolation, ensuring that applications run independently of each other without interference, thus enhancing security and stability.

Scalability: Docker's architecture facilitates effortless scaling by allowing applications to be deployed and replicated across multiple containers, enabling seamless horizontal scaling as demand fluctuates.

Consistency: With Docker, developers can create standardized environments using Dockerfiles and Docker Compose, ensuring consistency between development, testing, and production environments.

Speed: Docker accelerates the development lifecycle by reducing the time spent on setting up development environments, debugging compatibility issues, and deploying applications.

Getting Started with Docker: To embark on your Docker journey, begin by installing Docker Desktop or Docker Engine on your development machine. Docker Desktop bundles Docker Engine with a graphical interface for managing containers, while a standalone Docker Engine installation is driven from the command line and is the usual choice on Linux servers.

Once Docker is installed, you can start building and running containers using Docker's command-line interface (CLI). The basic workflow involves:

Writing a Dockerfile: A text file that contains instructions for building a Docker image, specifying the base image, dependencies, environment variables, and commands to run.

Building Docker Images: Use the docker build command to build a Docker image from the Dockerfile.

Running Containers: Utilize the docker run command to create and run containers based on the Docker images.

Managing Containers: Docker provides a range of commands for managing containers, including starting, stopping, restarting, and removing containers.
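The four steps above can be sketched as follows. This is a minimal illustration that only assembles the Dockerfile text and the `docker` command lines without running anything. The image tag `demo-app:1.0`, the container name, the `app.py` file, and the helper function names are all assumptions made for the example.

```python
# Step 1: a minimal Dockerfile (base image, working directory, file copy, command).
# app.py is a hypothetical application file.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
"""


def build_command(tag, context="."):
    """Step 2: build an image from the Dockerfile in the given context directory."""
    return ["docker", "build", "-t", tag, context]


def run_command(tag, name, host_port=None, container_port=None):
    """Step 3: create and start a detached container, optionally publishing a port."""
    argv = ["docker", "run", "-d", "--name", name]
    if host_port and container_port:
        argv += ["-p", f"{host_port}:{container_port}"]
    argv.append(tag)
    return argv


def manage_command(verb, name):
    """Step 4: start, stop, restart, or remove an existing container."""
    if verb not in {"start", "stop", "restart", "rm"}:
        raise ValueError(f"unsupported verb: {verb}")
    return ["docker", verb, name]


if __name__ == "__main__":
    print(DOCKERFILE)
    print(" ".join(build_command("demo-app:1.0")))
    print(" ".join(run_command("demo-app:1.0", "demo", 8080, 8000)))
    print(" ".join(manage_command("stop", "demo")))
```

Each helper returns an argument list, so the same values could be handed to `subprocess.run` on a machine where Docker is installed.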

Best Practices for Docker Containerization: To maximize the benefits of Docker containerization, consider the following best practices:

Keep Containers Lightweight: Minimize the size of Docker images by removing unnecessary dependencies and optimizing Dockerfiles.

Use Multi-Stage Builds: Employ multi-stage builds to reduce the size of Docker images and improve build times.

Utilize Docker Compose: Docker Compose simplifies the management of multi-container applications by defining them in a single YAML file.

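Implement Health Checks: Define health checks in Dockerfiles so that Docker can report whether a container is functioning correctly. On its own, Docker only marks a failing container as unhealthy; restarting or replacing it is typically left to an orchestrator such as Docker Swarm.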

Secure Containers: Follow security best practices, such as running containers with non-root users, limiting container privileges, and regularly updating base images to patch vulnerabilities.
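Several of these practices can be combined in a single Dockerfile. The sketch below renders one from Python so the port can be parameterized. It assumes a hypothetical Python application (`app.py` with a `requirements.txt`) that answers on a `/health` endpoint. Those names, the `appuser` account, and the `render_dockerfile` helper are illustrative and do not come from the post.

```python
def render_dockerfile(base="python:3.12-slim", port=8000):
    """Render a Dockerfile using a multi-stage build, a non-root user, and a health check."""
    return f"""\
# Stage 1: install dependencies into an isolated prefix
FROM {base} AS build
WORKDIR /src
COPY requirements.txt .
RUN pip install --no-cache-dir --prefix=/install -r requirements.txt

# Stage 2: copy only what is needed at runtime, keeping the final image small
FROM {base}
COPY --from=build /install /usr/local
COPY app.py /app/app.py
# Run as an unprivileged user instead of root
RUN useradd --create-home appuser
USER appuser
# Mark the container unhealthy if the assumed /health endpoint stops answering
HEALTHCHECK --interval=30s --timeout=3s --retries=3 \\
  CMD python -c "import urllib.request; urllib.request.urlopen('http://localhost:{port}/health')"
CMD ["python", "/app/app.py"]
"""


if __name__ == "__main__":
    print(render_dockerfile())
```

The build stage and its pip cache are discarded, so only the installed packages and the application file reach the final image.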

Conclusion: Docker containerization has revolutionized the way applications are developed, deployed, and managed, offering unparalleled agility, efficiency, and scalability. By embracing Docker, developers can streamline their development workflow, accelerate the deployment process, and improve the consistency and reliability of their applications. Whether you're a seasoned developer or just getting started, Docker opens up a world of possibilities, empowering you to build and deploy applications with ease in today's fast-paced digital landscape.

For more details visit www.qcsdclabs.com

#redhat#linux#docker#aws#agile#agiledevelopment#container#redhatcourses#information technology#ContainerSecurity#ContainerDeployment#DockerSwarm#Kubernetes#ContainerOrchestration#DevOps


Native Spectre v2 Exploit (CVE-2024-2201) Found Targeting Linux Kernel on Intel Systems

Cybersecurity researchers have unveiled what they claim to be the "first native Spectre v2 exploit" against the Linux kernel on Intel systems, potentially enabling the leakage of sensitive data from memory. The exploit, dubbed Native Branch History Injection (BHI), can be used to extract arbitrary kernel memory at a rate of 3.5 kB/sec by circumventing existing Spectre v2/BHI mitigations, according to researchers from the Systems and Network Security Group (VUSec) at Vrije Universiteit Amsterdam.

The vulnerability is tracked as CVE-2024-2201. BHI itself was first disclosed by VUSec in March 2022 as a technique that can bypass Spectre v2 protections in modern processors from Intel, AMD, and Arm.

https://www.youtube.com/watch?v=24HcE1rDMdo

Because the original attack leveraged extended Berkeley Packet Filters (eBPFs), Intel's recommendations to address the issue included disabling Linux's unprivileged eBPFs. However, the new Native BHI exploit neutralizes this countermeasure by demonstrating that BHI is possible without eBPF, affecting all Intel systems susceptible to the vulnerability.

The CERT Coordination Center (CERT/CC) warned that existing mitigation techniques, such as disabling privileged eBPF and enabling (Fine)IBT, are insufficient in stopping BHI exploitation against the kernel/hypervisor. "An unauthenticated attacker can exploit this vulnerability to leak privileged memory from the CPU by speculatively jumping to a chosen gadget," the advisory stated.

The disclosure comes weeks after researchers detailed GhostRace (CVE-2024-2193), a variant of Spectre v1 that combines speculative execution and race conditions to leak data from contemporary CPU architectures.

It also follows new research from ETH Zurich that unveiled a family of attacks, dubbed Ahoi Attacks, that could compromise hardware-based trusted execution environments (TEEs) and break confidential virtual machines (CVMs) like AMD Secure Encrypted Virtualization-Secure Nested Paging (SEV-SNP) and Intel Trust Domain Extensions (TDX). In response to the Ahoi Attacks findings, AMD acknowledged the vulnerability is rooted in the Linux kernel implementation of SEV-SNP and stated that fixes addressing some of the issues have been upstreamed to the main Linux kernel.
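For readers who want to see what their own kernel reports, Linux exposes per-vulnerability mitigation status under `/sys/devices/system/cpu/vulnerabilities`. The sketch below reads the `spectre_v2` entry. The helper names are made up, and the assumption that patched kernels include a "BHI" field in that line should be checked against your kernel's documentation rather than taken from this example.

```python
from pathlib import Path

# Standard sysfs location on Linux; absent on other systems.
VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def mitigation_status(name="spectre_v2", base=VULN_DIR):
    """Return the kernel's status line for a vulnerability, or None if unavailable."""
    entry = Path(base) / name
    try:
        return entry.read_text().strip()
    except OSError:
        return None


def mentions_bhi(status):
    """Assumption: kernels carrying the BHI fixes add a 'BHI' field to the status line."""
    return status is not None and "BHI" in status


if __name__ == "__main__":
    status = mitigation_status()
    print("spectre_v2:", status)
    print("BHI field present:", mentions_bhi(status))
```

A missing BHI field does not by itself prove a system is exploitable; it only suggests the kernel predates the reporting change or runs on a CPU where the field is not shown.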


In this blog post, we’ll walk through how to install KVM on Debian 12 and, if you ever need to, cleanly remove it from your system.


XboxOS is huge even if you only play games on a Desktop. Here's why.

A quick info dump on why SteamOS is so good currently: it drops everything to run games. That's it. That's the secret.

SteamOS is just a pre-packaged Arch Linux that launches into a GameScope session instead of the Desktop and runs next-to-nothing in the background when in that GameScope session.

Now, I want you to ask yourself: What if Windows could do that? What if Windows could launch a Console-style UI that is self-contained and completely alone, with no processes like OneDrive, Office, or Copilot to eat performance?

What if I told you it's already possible because of Xbox Consoles?

Xbox consoles run just like SteamOS, but with Windows as a base. They use a system called "Hyperland" to give each game its own self-contained Virtual Machine. This means no Kernel Level anticheat. This means no background processes to eat at performance. This is also how Quick Resume works. You're just saving the state of the Hyperland VM for that game and recalling it when you start it back up.

I genuinely believe the new Xbox handhelds are just running Windows with a custom Hyperland session. Windows kills itself in favor of this Hyperland session, and the games launch in their own sessions.

This is huge, and as a Linux fanboy I'm very, very excited. To think that we're getting what is basically SteamOS's performance and security with Windows compatibility is a massive win.

Submitted June 08, 2025 at 09:02PM by Kragwulf https://ift.tt/y2qU7ob via /r/gaming


Docker and Containerization in Cloud Native Development

In the world of cloud native application development, the demand for speed, agility, and scalability has never been higher. Businesses strive to deliver software faster while maintaining performance, reliability, and security. One of the key technologies enabling this transformation is Docker—a powerful tool that uses containerization to simplify and streamline the development and deployment of applications.

Containers, especially when managed with Docker, have become fundamental to how modern applications are built and operated in cloud environments. They encapsulate everything an application needs to run—code, dependencies, libraries, and configuration—into lightweight, portable units. This approach has revolutionized the software lifecycle from development to production.

What Is Docker and Why Does It Matter?

Docker is an open-source platform that automates the deployment of applications inside software containers. Containers offer a more consistent and efficient way to manage software, allowing developers to build once and run anywhere—without worrying about environmental inconsistencies.

Before Docker, developers often faced the notorious "it works on my machine" issue. With Docker, you can run the same containerized app in development, testing, and production environments without modification. This consistency dramatically reduces bugs and deployment failures.

Benefits of Docker in Cloud Native Development

Docker plays a vital role in cloud native environments by promoting the principles of scalability, automation, and microservices-based architecture. Here’s how it contributes:

1. Portability and Consistency

Since containers include everything needed to run an app, they can move between cloud providers or on-prem systems without changes. Whether you're using AWS, Azure, GCP, or a private cloud, Docker provides a seamless deployment experience.

2. Resource Efficiency

Containers are lightweight and share the host system’s kernel, making them more efficient than virtual machines (VMs). You can run more containers on the same hardware, reducing costs and resource usage.

3. Rapid Deployment and Rollback

Docker enables faster application deployment through pre-configured images and automated CI/CD pipelines. If a new deployment fails, you can quickly roll back by redeploying the previous image version, since each release is stored as its own tagged image.
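The rollback idea can be illustrated with a small sketch that tracks which image tags have been deployed and returns the previous one when a release fails. The `ReleaseHistory` class, the registry name, and the version numbers are hypothetical. A real pipeline would hand the returned image reference to its deployment tool.

```python
class ReleaseHistory:
    """Tracks deployed image tags so a failed release can fall back to the previous one."""

    def __init__(self, image):
        self.image = image
        self.tags = []

    def deploy(self, tag):
        """Record a new release and return the full image reference to deploy."""
        self.tags.append(tag)
        return f"{self.image}:{tag}"

    def rollback(self):
        """Discard the latest release and return the reference of the one before it."""
        if len(self.tags) < 2:
            raise RuntimeError("no earlier release to roll back to")
        self.tags.pop()
        return f"{self.image}:{self.tags[-1]}"


if __name__ == "__main__":
    history = ReleaseHistory("registry.example.com/shop")  # placeholder registry
    history.deploy("1.4.0")
    history.deploy("1.5.0")
    print("rolling back to", history.rollback())
```

Because images are immutable once tagged, going back is a matter of pointing the deployment at an older reference rather than rebuilding anything.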

4. Isolation and Security

Each Docker container runs in isolation, ensuring that applications do not interfere with one another. This isolation also enhances security by limiting how far a compromise in one container can spread, although containers share the host kernel, so a kernel-level vulnerability can still affect others on the same host.

5. Support for Microservices

Microservices architecture is a key component of cloud native application development. Docker supports this approach by enabling the development of loosely coupled services that can scale independently and communicate via APIs.

Docker Compose and Orchestration Tools

Docker alone is powerful, but in larger cloud native environments, you need tools to manage multiple containers and services. Docker Compose allows developers to define and manage multi-container applications using a single YAML file. For production-scale orchestration, Kubernetes takes over, managing deployment, scaling, and health of containers.

Docker integrates well with Kubernetes, providing a robust foundation for deploying and managing microservices-based applications at scale.

Real-World Use Cases of Docker in the Cloud

Many organizations already use Docker to power their digital transformation. For instance:

Netflix uses containerization to manage thousands of microservices that stream content globally.

Spotify runs its music streaming services in containers for consistent performance.

Airbnb speeds up development and testing by running staging environments in isolated containers.

These examples show how Docker not only supports large-scale operations but also enhances agility in cloud-based software development.

Best Practices for Using Docker in Cloud Native Environments

To make the most of Docker in your cloud native journey, consider these best practices:

Use minimal base images (like Alpine) to reduce attack surfaces and improve performance.

Keep containers stateless and use external services for data storage to support scalability.

Implement proper logging and monitoring to ensure container health and diagnose issues.

Use multi-stage builds to keep images clean and optimized for production.

Automate container updates using CI/CD tools for faster iteration and delivery.

These practices help maintain a secure, maintainable, and scalable cloud native architecture.

Challenges and Considerations

Despite its many advantages, Docker does come with challenges. Managing networking between containers, securing images, and handling persistent storage can be complex. However, with the right tools and strategies, these issues can be managed effectively.

Cloud providers now offer native services—like AWS ECS, Azure Container Instances, and Google Cloud Run—that simplify the management of containerized workloads, making Docker even more accessible for development teams.

Conclusion

Docker has become an essential part of cloud native application development by making it easier to build, deploy, and manage modern applications. Its simplicity, consistency, and compatibility with orchestration tools like Kubernetes make it a cornerstone technology for businesses embracing the cloud.

As organizations continue to evolve their software strategies, Docker will remain a key enabler—powering faster releases, better scalability, and more resilient applications in the cloud era.

#CloudNative#Docker#Containers#DevOps#Kubernetes#Microservices#CloudComputing#CloudDevelopment#SoftwareEngineering#ModernApps#CloudZone#CloudArchitecture


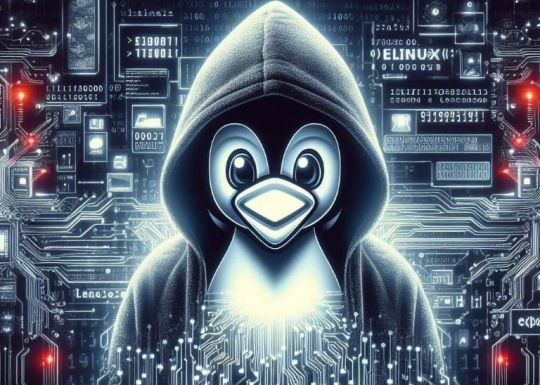

Why Choose Red Hat for Virtualization?

In today’s fast-paced digital landscape, virtualization is more than just a technology — it’s a strategic enabler for agility, scalability, and cost-efficiency. When selecting a virtualization platform, organizations need a solution that is reliable, flexible, and future-proof. This is where Red Hat stands out.

Red Hat brings together trusted products, a vibrant partner ecosystem, and deep open source expertise, offering a comprehensive virtualization solution designed not only to migrate your virtual machines (VMs) today but also to modernize your infrastructure for tomorrow.

Key Reasons to Choose Red Hat for Virtualization

1. Open Source Foundation & Flexibility

Red Hat Virtualization (RHV) is built on open standards and the powerful KVM (Kernel-based Virtual Machine) hypervisor, providing high performance with the freedom to avoid vendor lock-in. This open architecture allows you to integrate with existing tools and adapt to changing business needs easily.

2. Enterprise-Grade Reliability & Performance

With years of experience in enterprise Linux and open source software, Red Hat delivers a virtualization platform that’s robust, secure, and optimized for mission-critical workloads. Red Hat’s commitment to rigorous testing and support ensures uptime and stability.

3. Comprehensive Ecosystem & Integration

Red Hat’s virtualization integrates seamlessly with Red Hat OpenShift for container orchestration, Red Hat Ansible for automation, and other solutions within the Red Hat ecosystem. This synergy helps organizations gradually transition from traditional VMs to modern cloud-native applications.

4. Cost Efficiency & Simplified Management

Red Hat Virtualization provides a unified management interface, allowing IT teams to efficiently manage virtual environments, reduce operational complexity, and lower total cost of ownership (TCO). Its open-source nature also means you can avoid expensive licensing fees tied to proprietary solutions.

5. Future-Ready for Modernization

Starting with VM migration is just the beginning. Red Hat supports hybrid cloud strategies and offers tools to containerize workloads when you’re ready to modernize, giving your organization a clear path to digital transformation.

How Red Hat Virtualization Works: A Simplified Workflow

Here’s a basic workflow of migrating and managing your VMs with Red Hat Virtualization:

Step 1: Assess and Discover

Evaluate your current VM infrastructure and workloads.

Step 2: Migrate VMs

Use Red Hat’s migration tools to move VMs to Red Hat Virtualization seamlessly, minimizing downtime.

Step 3: Manage and Optimize

Leverage Red Hat’s management platform to monitor, optimize, and automate your VM environment.

Step 4: Modernize Workloads

When ready, modernize your infrastructure by containerizing applications with OpenShift and automating operations using Ansible.

Conclusion

Choosing Red Hat for virtualization means investing in a solution that combines the power of open source with enterprise-grade reliability and future-ready innovation. Whether you’re migrating existing virtual machines or preparing your infrastructure for cloud-native modernization, Red Hat offers a trusted, flexible platform that scales with your business. For more details - www.hawkstack.com


Modern general-purpose computers run an operating system such as UNIX, Linux, Microsoft Windows, or Mac OS, which in turn runs application software. An operating system (OS) manages all input and output within a computer system. It handles multiple users, networking, printing, and memory and file management, and it conveys data to the screen, printer, and other hardware devices connected to the computer (Laudon & Laudon, 1997, p.34). Because computers differ in construction, the input and output commands of each system vary. An OS consists of many compact programs controlled by the core, or kernel. The kernel is the central component of an operating system, through which application programs reach the hardware and other resources of the computer. Besides the kernel, operating systems have additional tools that display programs and manage the user interface, along with utility programs for file management and configuration of the OS. Most operating systems use multiprogramming schemes that manage different jobs to get the most out of the computer system. At any given time, the kernel manages many processes, including user processes such as applications and system processes such as the accounting process. Thus, an OS kernel is responsible for managing multiple functions such as “inter-process communication, scheduling the processes within the CPU, creating, and deleting processes” (Milenkovic, 1987, p.157). Linux is one such contemporary operating system, based on UNIX standards. It comprises three main bodies of code: the kernel, the system libraries, and the system utilities. It runs most efficiently with PC hardware and is compatible with many computer systems. Compared with other operating systems, Linux offers multiple choices: it is configurable and reliable, and it supports networking and internet use.
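To make the idea of a kernel scheduling several processes on one CPU more concrete, here is a small round-robin simulation. It is a teaching sketch only, not how Linux schedules processes, and the job names and time units are invented for the example.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Simulate round-robin scheduling.

    jobs maps a process name to the CPU time units it still needs.
    Each turn a process runs for at most `quantum` units, then goes to the
    back of the queue if it is not finished. Returns the completion order.
    """
    queue = deque(jobs.items())
    finished = []
    while queue:
        name, remaining = queue.popleft()
        remaining -= quantum
        if remaining > 0:
            queue.append((name, remaining))  # not done yet: wait for another turn
        else:
            finished.append(name)
    return finished


if __name__ == "__main__":
    # Two user processes and one system process, with made-up CPU demands.
    print(round_robin({"editor": 3, "compiler": 5, "accounting": 2}, quantum=2))
```

Short jobs finish early even when they arrive last, which is the property that made time slicing attractive for sharing one machine among many users.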
History of Linux Operating System An operating system (OS) entails a program that controls the hardware and software of a computer system through the control of the computer memory, management of the input and output devices connected to the computer; file management, processing of instructions and facilitating networking (Milenkovic, 1987, p.162). Early computers lacked operating systems for managing software and hardware. By the 1960s, pressured by the need to maximize CPU performance, commercial software vendors including UNIVAC and Control Data Corporation were able to provide tools such as batch processing systems for scheduling, allocating resources and execution of multiple jobs (Weizer, 1981, p.119). The batch systems involved developing ad hoc programs for each model (Silberschatz, Galvin, & Gagne, 2005, p.213). Several key concepts during the 1960s contributed to the development of the modern operating systems. The development of IBM System, that involved a single OS, the OS/360, intended to replace the batch system. Among the important features of OS/360 was its hard disk memory storage device, the DASD, which allowed easy file management. Another major development that contributed to the development of modern operating systems was the construct of time-sharing, which could let several users to have virtual access to the machine, since computer resources were expensive at that time. This led to the development of a time-sharing system, the Multics, which formed the basis for earlier operating systems particularly the UNIX (Ritchie, 1984, p.1577). The early microcomputers lacked the capacity for an elaborate operating system, but CP/M was one notable operating system designed for microcomputers that largely based on creating MS-DOS developed by IBM. In the 1980s, Apple Macintosh Computer developed Mac OS while Microsoft developed the Windows NT in 1999 forming the basis for its subsequent operating systems. 
Apple released the Mac OS X in 2001, an OS rebuilt on UNIX core. Due to the increasing complexity of the devices incorporated into computers, these devices use embedded OS. Modern OS have Command line interface (CLI) operating systems that operate using the keyboard functionalities for input. Other modern Operating systems use the mouse for input but this is dependent on the CPU system (Laudon, & Laudon, 1997, p.36) However, Linux and BSD operating systems can run all CPUs. From the 1990s, Microsoft Windows and UNIX operating systems such as Linux and Mac OS X have been the choice for personal computers. Linux is a modern operating system first designed in 1991 as a self-contained kernel by Linus Torvalds based on UNIX standards. Its development involved a collaboration of many developers from all over the world who communicated using the internet. The kernel is the major component of Linux operating system, which is compatible with existing UNIX software (Ritchie, 1984, p.1581). The Linux kernel developed in 1991 was compatible with most Intel processors but had limited support for embedded devices. Linux 1.0 version developed in 1994 had improved features including BSD-compatibility, improved file management systems, support for TCP and IP networking programming, and support for SCSI controllers that allowed quick access to the computer disk. In 1995, Linus developed Linux version 1.2 with features compatible with PC while version 2.0 came about in 1996 with two distinctive features; allowed support for microprocessors and support for much architecture such as Alpha port. It also had improved features such as advanced file and memory management and networking. The Linux networking tools bases on BSD code such as Free BSD and allows improved networking compared to earlier versions. The Components of Linux Operating system The basis of the design of Linux Operating system commonly used in servers and PC is UNIX standards. 
It intended to allow use of a computer system by multiple users, allow multitasking based on the earlier time-sharing configuration, and be portable. The design of UNIX systems has common distinctive concepts; devices that allow communication between multiple users, data storage involve plain text storing and hierarchical system for file management (Silberschatz, Galvin, & Gagne, 2005, p.192). As a result, the Linux file system follows the UNIX networking standards with an aim of improving efficiency and speed of the systems. Linux design is compliant with the SVR4 UNIX semantics and BSD codes and POSIX requirements. The four major components of Linux operating system include programs for system management, user utility programs, compilers, and user processes. The four components make up the system shared libraries that are core to Linux OS kernel. User Processes System management programs User Utility Programs Compilers Shared system Libraries Linux Operating System kernel Kernel modules All UNIX implementations are composed of three main systems of codes: the kernel, the shared system libraries, and the system utilities. Similarly, Linux, which bases on UNIX design principles, has the three code systems. The kernel provides the required operating system abstractions while the kernel code allows the user to access the computer hardware and software. This code together with the OS data structures occur at a single address within the OS. The system libraries on the other hand, provide standard functionalities that allow the interaction between the kernel and the operating system and are independent of the kernel code. The purpose of the system utilities is to perform particular tasks as per the requirements of the various user applications or programs. The kernel modules refer to the functionalities of the kernel code that can be loadable or unloadable independent of the other functionalities of the kernel. 
They enhance the implementation of a networking protocol, a file system, or any embedded device thus allowing the distribution of file systems or device drivers. The Linux kernel modules allow the setting up of a Linux system with minimal kernel or additional inbuilt device drivers. The Linux module support comprises of three main components: the driver registration, conflict resolution, and module process management, which allow loading of modules into the kernel. Module loading allows the management of the kernel code within the kernel memory and management of the symbols referenced by the kernel modules. The module requester is involved in the management of loading requested and confers with the kernel regarding the status of the loaded module, and unloads it if no longer needed. The driver registration functionality allows kernels to send information to the rest of the kernel regarding the availability of a particular driver. Normally, the kernel maintains registration tables of all available drivers and provides the options for adding or removing particular drivers. Registration tables kept by the kernel include file systems, binary formats, networking protocols and drivers for various devices. Conflict resolution is a mechanism that allows various drivers to possess a given hardware resources and protects one driver from using resources of another driver. Thus, the conflict resolution module allows fair access to hardware resources by all drivers, prevents new device drivers from affecting the existing drivers by ensuring fair access to hardware resources. In Linux operating system, the module process management aids in the separation of the creation processes and the implementation of a new program. It comprises of the fork system call that is responsible for creating a new process and execve call, which is responsible for running a new program. 
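The split between creating a process and running a new program can be tried from user space. The following is a minimal sketch, assuming a Linux or other UNIX-like system with Python available; the helper name run_program is hypothetical and chosen only for illustration, while os.fork, os.execve, and os.waitpid are the standard library wrappers around the corresponding system calls.

```python
import os


def run_program(path, argv, env):
    """Fork a child, replace its image with `path`, and return its exit status.

    `run_program` is a hypothetical helper for illustration only.
    """
    pid = os.fork()  # step 1: create a new process (a copy of this one)
    if pid == 0:
        # Child: step 2, run a new program in the process just created.
        try:
            os.execve(path, argv, env)  # does not return on success
        except OSError:
            os._exit(127)  # exec failed; leave without running parent cleanup
    # Parent: wait for the child and decode how it ended.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return -os.WTERMSIG(status)  # negative value: child was killed by a signal


if __name__ == "__main__":
    # The argument vector and environment vector are passed explicitly.
    code = run_program("/bin/sh", ["sh", "-c", "exit 3"], {"PATH": "/usr/bin:/bin"})
    print("child exit status:", code)
```

Because the two steps are separate, the child can adjust its own state (open files, environment, signal handling) between fork and execve before the new program starts.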
Under Linux, the properties of a process fall into three categories: the identity of the process, its environment, and its context. The process identity includes the process ID (PID), which identifies the process to the OS when an application makes a system call. Each process also has a personality identifier, which is used for compatibility with other UNIX variants (Silberschatz, Galvin, & Gagne, 2005, p.209). The process environment consists of the argument and environment vectors, which allow the behavior of a program to be customized per process. The process context comprises the scheduling context, the file table used for I/O, the virtual memory context, and the signal-handler table. The process context describes the state of a running program at any given time.

Linux uses signals, which are limited in number, for communication between processes. Processes running in kernel mode do not use signals; they communicate through scheduling states and wait_queue structures. Shared memory offers a fast way to communicate and transfer data between processes. To synchronize access, however, shared memory has to be used together with another inter-process communication mechanism. The shared-memory object acts as a backing store for shared-memory regions, and it retains its contents even when no process currently maps it into virtual memory.

The Linux file system is based on UNIX semantics. The kernel manages the various file systems through an abstraction layer called the virtual file system (VFS), which has two components: a set of definitions that describe what a file object looks like, and inode objects, which represent individual files. The file system object represents an entire file system, and a software layer manipulates the individual file objects.

Linux Operating System and Security

The Linux kernel uses two techniques to protect its critical sections and the shared data it manages. First, normal kernel code, unlike the kernel code of some other operating systems, is non-preemptible. If an interrupt occurs while a process is executing kernel code, the need_resched flag is set so that the scheduler runs once control is about to return to user mode. Second, in critical sections the kernel uses the processor’s interrupt-control hardware to disable interrupts, which guarantees that the section completes without an interrupt service routine accessing the shared data at the same time.
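The shared-memory mechanism described earlier in this essay can also be tried from user space. The sketch below is only an illustration, assuming Python 3.8 or later; the function name share_bytes is hypothetical, and two handles inside one process stand in for the two separate processes that would normally attach to the segment.

```python
from multiprocessing import shared_memory


def share_bytes(payload: bytes) -> bytes:
    """Write `payload` into a shared-memory segment and read it back
    through a second, independently attached handle.

    `share_bytes` is a hypothetical name used for illustration.
    """
    if not payload:
        raise ValueError("payload must not be empty")
    writer = shared_memory.SharedMemory(create=True, size=len(payload))
    try:
        writer.buf[:len(payload)] = payload  # producer side: copy data in
        # Consumer side: attach to the same segment by its name.
        reader = shared_memory.SharedMemory(name=writer.name)
        try:
            # The segment may be rounded up to a page, so slice explicitly.
            return bytes(reader.buf[:len(payload)])
        finally:
            reader.close()
    finally:
        writer.close()
        writer.unlink()  # remove the segment once nobody needs it


if __name__ == "__main__":
    print(share_bytes(b"hello from shared memory"))
```

Note that nothing here synchronizes the writer and the reader. As the essay points out, real programs pair shared memory with another mechanism, such as a semaphore or a lock, to coordinate access.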


Text

# Guide to changing the CloudPanel password and the Cloud Server root password on KVM #CloudPanel #KVM #RootPassword #ServerSecurity #VPS

I. What is KVM virtualization technology? KVM (Kernel-based Virtual Machine) is a powerful virtualization solution that is integrated directly into the Linux kernel. This technology lets one physical server run multiple independent virtual machines (VMs), and each VM can have its own operating system installed, for example…


Text

Cloud Computing Solutions: Which Private Cloud Platform is Right for You?

If you’ve been navigating the world of IT or digital infrastructure, chances are you’ve come across the term cloud computing solutions more than once. From running websites and apps to storing sensitive data — everything is shifting to the cloud. But with so many options out there, how do you know which one fits your business needs best?

Let’s talk about it — especially if you're considering private or hybrid cloud setups.

Whether you’re an enterprise looking for better performance or a growing business wanting more control over your infrastructure, private cloud hosting might be your perfect match. In this post, we’ll break down some of the most powerful platforms out there, including VMware Cloud Hosting, Nutanix, Hyper-V, Proxmox, KVM, OpenStack, and OpenShift Private Cloud Hosting.

First Things First: What Are Cloud Computing Solutions?

In simple terms, cloud computing solutions provide you with access to computing resources like servers, storage, and software — but instead of managing physical hardware, you rent them virtually, usually on a pay-as-you-go model.

There are three main types of cloud environments:

Public Cloud – Shared resources with others (like Google Cloud or AWS)

Private Cloud – Resources are dedicated just to you

Hybrid Cloud – A mix of both, giving you flexibility

Private cloud platforms offer a high level of control, customization, and security — ideal for industries where uptime and data privacy are critical.

Let’s Dive Into the Top Private Cloud Hosting Platforms

1. VMware Cloud Hosting

VMware is a veteran in the cloud space. It allows you to replicate your on-premise data center environment in the cloud, so there’s no need to learn new tools. If you already use tools like vSphere or vSAN, VMware Cloud Hosting is a natural fit.

It’s highly scalable and secure — a great choice for businesses of any size that want cloud flexibility without completely overhauling their systems.

2. Nutanix Private Cloud Hosting

If you're looking for simplicity and power packed together, Nutanix Private Cloud Hosting might just be your best friend. Nutanix shines when it comes to user-friendly dashboards, automation, and managing hybrid environments. It's ideal for teams who want performance without spending hours managing infrastructure.

3. Hyper-V Private Cloud Hosting

For businesses using a lot of Microsoft products, Hyper-V Private Cloud Hosting makes perfect sense. Built by Microsoft, Hyper-V integrates smoothly with Windows Server and Microsoft System Center, making virtualization easy and reliable.

It's a go-to for companies already in the Microsoft ecosystem who want private cloud flexibility without leaving their comfort zone.

4. Proxmox Private Cloud Hosting

If you’re someone who appreciates open-source platforms, Proxmox Private Cloud Hosting might be right up your alley. It combines KVM virtualization and Linux containers (LXC) in one neat package.

Proxmox is lightweight, secure, and customizable. Plus, its web-based dashboard is super intuitive — making it a favorite among IT admins and developers alike.

5. KVM Private Cloud Hosting

KVM (Kernel-based Virtual Machine) is another open-source option that’s fast, reliable, and secure. It’s built into Linux, so if you’re already in the Linux world, it integrates seamlessly.

KVM Private Cloud Hosting is perfect for businesses that want a lightweight, customizable, and high-performing virtualization environment.
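Since KVM is built into Linux, a quick way to see whether a host is ready for it is to look at what the kernel already reports. Here is a rough sketch, assuming an x86 Linux host: the vmx (Intel VT-x) and svm (AMD-V) CPU flags and the /dev/kvm device node are standard, but the function names are made up for this example, and other CPU architectures report virtualization support differently.

```python
import os


def cpu_has_virtualization(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line lists vmx (Intel) or svm (AMD)."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags or "svm" in flags:
                return True
    return False


def kvm_device_present(path: str = "/dev/kvm") -> bool:
    """Return True if the KVM device node exists (KVM module is loaded)."""
    return os.path.exists(path)


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            text = handle.read()
    except OSError:
        text = ""  # not a Linux host, or /proc is unavailable
    print("CPU virtualization flags:", cpu_has_virtualization(text))
    print("/dev/kvm present:", kvm_device_present())
```

If the first check is true but the second is false, hardware virtualization may simply be disabled in the firmware settings, or the KVM kernel module may not be loaded.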

6. OpenStack Private Cloud Hosting

Need full control and want to scale massively? OpenStack Private Cloud Hosting is worth a look. It’s open-source, flexible, and designed for large-scale environments.

OpenStack works great for telecom, research institutions, or any organization that needs a lot of flexibility and power across private or public cloud deployments.

7. OpenShift Private Cloud Hosting

If you're building and deploying apps in containers, OpenShift Private Cloud Hosting at serverbasket is a dream come true. Developed by Red Hat, OpenShift is built on Kubernetes and focuses on DevOps, automation, and rapid application development.

It’s ideal for teams running CI/CD pipelines, microservices, or containerized workloads — especially when consistency and speed are top priorities.

So, Which One Should You Choose?

The right private cloud hosting solution really depends on your business needs. Here’s a quick cheat sheet:

Go for VMware if you want enterprise-grade features with familiar tools.

Try Nutanix if you want something powerful but easy to manage.

Hyper-V is perfect if you’re already using Microsoft tech.

Proxmox and KVM are great for tech-savvy teams that love open source.

OpenStack is ideal for large-scale, customizable deployments.

OpenShift is built for developers who live in the container world.

Final Thoughts

Cloud computing isn’t a one-size-fits-all solution. But with platforms like VMware, Nutanix, Hyper-V, Proxmox, KVM, OpenStack, and OpenShift Private Cloud Hosting, you’ve got options that can scale with you — whether you're running a small development team or a global enterprise.

Choosing the right platform means looking at your current infrastructure, your team's expertise, and where you want to be a year from now. Whatever your path, the right cloud solution can drive efficiency, reduce overhead, and set your business up for long-term success.

#Top Cloud Computing Solutions#Nutanix Private Cloud#VMware Cloud Server Hosting#Proxmox Private Cloud#KVM Private Cloud


Text

Hyper-V vs. Other Hypervisors

Comparison with other hypervisors: VMware, VirtualBox, and others

March 30, 2025

Why is this integration so important? Well, imagine having to run a foreign operating system on your Windows machine. That often brings compatibility problems, driver conflicts, and other headaches. Hyper-V, by contrast, fits like a glove.

Performance that convinces

Hyper-V is known for its high performance. It uses the hardware efficiently and provides a fast, stable environment for your VMs. It is like a sports car opening up on the highway.

Security first

Security is a huge topic, isn't it? Hyper-V offers advanced security features that protect your VMs from threats. It is like a high-security wing for your data.

Live migration: pure flexibility

Imagine you need to service a server but do not want to shut down your VMs. With live migration, you can move VMs from one server to another without any downtime. It is like a driver change on the fly in a car race.

Storage and networking features

Hyper-V offers extensive storage and networking features that let you manage your VMs flexibly. You can create virtual hard disks, configure networks, and much more. It is like a toolbox with an endless supply of tools.

The question of cost: often included

Another advantage of Hyper-V is that it is often included with Windows Server. That means you do not have to pay extra for expensive software. It is like a free upgrade that saves you a lot of money.

Comparison with other hypervisors: VMware, VirtualBox, and others

Of course, there are other hypervisors too, such as VMware and VirtualBox. VMware is known for its enterprise solutions, while VirtualBox is a popular choice for developers and home users. But how do they fare compared with Hyper-V?

VMware: the top dog in the enterprise space

VMware is without doubt a strong competitor. It offers many advanced features and is widely used in large companies. But it is often more expensive and more complex than Hyper-V.

VirtualBox: the all-rounder for developers

VirtualBox is a good choice for developers and home users. It is free and easy to use. But it does not offer the same performance and scalability as Hyper-V 2025.

KVM: the open-source alternative

KVM (Kernel-based Virtual Machine) is another popular open-source alternative. It is flexible and powerful, but it often requires more technical know-how than Hyper-V.

The decision: why Hyper-V often comes out ahead

So why do so many people choose Hyper-V for their Windows environments? The answer is simple: it is the ideal combination of performance, security, integration, and cost. It is like a Swiss Army knife that can do everything.

Hyper-V and the cloud: Azure integration

Hyper-V also plays an important role in the cloud. Seamless integration with Microsoft Azure lets you move your VMs to the cloud easily. It is like an elevator that takes you straight up into the sky.

The future of Hyper-V: innovations and updates

Microsoft is constantly working to improve Hyper-V. New features and updates are released regularly to keep it up to date. It is like a car that gets a new model every year.

Hyper-V for developers: a gold mine

For developers, Hyper-V is a gold mine. It lets them develop and test in different environments without putting their main systems at risk. It is like a playground for programmers.

Hyper-V in the data center: scalability and efficiency

In data centers, scalability and efficiency are crucial. Hyper-V provides the tools needed to manage large numbers of VMs and make the best use of resources. It is like well-oiled clockwork.

Hyper-V and the community: support and resources

There is a large community of Hyper-V users who support one another and share resources. It is like a huge family that sticks together.

Hyper-V: a conclusion

So what is the conclusion? Hyper-V is a powerful and versatile solution that is closely matched to Windows environments. It offers high performance, security, and flexibility, often at a favorable price. So if you are looking for a hypervisor for your Windows systems, you should definitely consider Hyper-V. It is like a loyal companion that never lets you down.

With Network4you (systemhaus münchen), why not have the best of both worlds when managing the risks of doing business in a digitally oriented society? Let us give your information technology what it needs for health and growth.


Text

When we hear about software containerization platforms these days, the first name that comes up is Docker, one of the world’s leading software containerization platforms.

Software containerization is virtualization at the operating system level, where the kernel of an operating system allows multiple isolated user-space environments to exist side by side. Such instances are called software containers. Docker automates the deployment of applications into software containers. An application deployed through Docker is lightweight, portable, and self-sufficient, and can run virtually anywhere.

About Docker

Docker was introduced as an open source project by dotCloud in 2013. The project became so popular that dotCloud changed its name to Docker Inc. What sets the Docker project apart is that it offers advanced tools that work together and are built on top of Linux kernel features.

The primary goal is to help developers and system administrators create, deploy, and run an application together with all the parts it needs, such as its dependencies, and get it running on any Linux machine, regardless of customized settings that might differ from the machine used to write and test the code.

An additional advantage of Docker is that it is open source software, so anyone can extend it with additional features.

A Linux application written in any language or framework can run inside a Docker container. There are two ways to get applications into containers: manual builds and a Dockerfile.

Manual builds

A manual build starts by launching a container from a base OS image. Applications and dependencies can be installed using the package manager offered by the chosen flavor of Linux. When installation of the application is complete, the result can be saved as an image and pushed to a registry.

Dockerfile

A Dockerfile is a script consisting of instructions and arguments that are applied automatically to a base image to create a new one.

Key Benefits

Docker offers an integrated suite of capabilities for an infrastructure-agnostic CaaS (containers as a service) model. IT operations teams can manage, provision, and secure both base application content and infrastructure resources, while developers build and deploy their applications in a self-service manner. The following are the key benefits of Docker.

Open Source

One of the key aspects of Docker is that it is entirely open source. Anyone can contribute to the platform, and adapt and extend it to meet their own requirements when they need features that do not come with Docker. This makes Docker a convenient option for system administrators and developers.

Low Overhead

Because containers do not virtualize an environment down to the hardware level, they cut overhead by including only the essential OS components and libraries that the application needs to run.

Agile

Docker was developed with simplicity and speed in mind, which is one of the reasons it became so popular. Developers can package any kind of software and its dependencies into a container. They can use any tooling, version, and language, because everything is packaged together into a container that standardizes every element without sacrificing anything.

Portable

Docker makes application containers entirely portable. Developers can ship applications from development to testing and production without breaking the code. Environmental differences have no effect on what is packaged inside the container, and an application does not need to be changed to run in production. This also benefits IT operations teams, who can avoid vendor lock-in by moving applications across data centers.

Control

Docker offers a high degree of control over applications as they move through the life cycle, because the environment is standardized. This makes it easier to answer questions about scale, manageability, and security along the way. IT teams can tune the level of flexibility and control needed to keep regulatory compliance, performance, and service levels in line for specific projects.

Who can use Docker?

Docker is used extensively by both developers and system administrators, which makes it an important part of DevOps. Developers can write code without worrying about the system on which it will run. For operations staff, Docker’s small footprint and low overhead give flexibility and reduce the number of systems needed.

Reason for Docker’s craze

The main reason for Docker’s popularity is its lightweight nature combined with its workflow. It is a fast, easy-to-use, developer-centric tool, and it is used extensively in DevOps projects. Docker makes it easy to pack and ship code, making the code as portable as possible while keeping that portability simple and user-friendly.

Docker is virtualization at the operating system level. Unlike hypervisor virtualization, in which virtual machines run on physical hardware through a hypervisor, containers run in user space on top of the operating system’s kernel. This makes them very lightweight and very fast.

Future of Docker

Big names in the IT industry such as Google, IBM, and Red Hat are investing in the Docker project. It has been 3 years since the Docker project started, and it is showing steady growth due to its extensive use in DevOps projects. In 2015, Docker and several other companies announced that they were working on an OS-independent standard for software containers.

Conclusion

Docker is already changing how applications are deployed into software containers and how they run in different environments. It is starting to reshape the way we think about software containerization. Job prospects for professionals with Docker expertise have increased by up to 1000 percent. Among the most sought-after IT skills, Docker occupies second place, and salaries for Docker professionals have risen by 28%. This shows how much demand there is for Docker in the IT industry. Anyone who works with code or servers can take a Docker course for a brighter career.
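To make the Dockerfile approach described above a little more concrete, here is a small sketch that generates the text of a minimal Dockerfile. FROM, RUN, and CMD are real Dockerfile instructions, but everything else is an assumption for illustration: the function name render_dockerfile is hypothetical, the example image tag and package are placeholders, and the apt-get line only makes sense for Debian or Ubuntu base images.

```python
import json


def render_dockerfile(base_image, packages, command):
    """Return the text of a minimal Dockerfile.

    Hypothetical helper: assumes a Debian/Ubuntu base image (apt-get).
    """
    lines = [f"FROM {base_image}"]  # the base image to start from
    if packages:
        # Install dependencies in a single layer on top of the base image.
        lines.append(
            "RUN apt-get update && apt-get install -y " + " ".join(packages)
        )
    # Exec-form CMD is a JSON array: the program and its arguments.
    lines.append("CMD " + json.dumps(list(command)))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("ubuntu:22.04", ["python3"], ["python3", "--version"]))
```

Saving the output as a file named Dockerfile and building it with the Docker CLI would produce a new image on top of the base image, which is the automated counterpart of the manual build steps.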


Text

RHEL 8.8: A Powerful and Secure Enterprise Linux Solution

Red Hat Enterprise Linux (RHEL) 8.8 is an advanced and stable operating system designed for modern enterprise environments. It builds upon the strengths of its predecessors, offering improved security, performance, and flexibility for businesses that rely on Linux-based infrastructure. With seamless integration into cloud and hybrid computing environments, RHEL 8.8 provides enterprises with the reliability they need for mission-critical workloads.

One of the key enhancements in RHEL 8.8 is its optimized performance across different hardware architectures. The Linux kernel has been further refined to support the latest processors, storage technologies, and networking hardware. These improvements result in reduced system latency, faster processing speeds, and better efficiency for demanding applications.

Security remains a top priority in RHEL 8.8. This release includes system-wide cryptographic policies and supports current security standards, including TLS 1.3 through OpenSSL 1.1.1. Additionally, SELinux (Security-Enhanced Linux) enforces mandatory access controls, preventing unauthorized modifications and ensuring that system integrity is maintained. These security features make RHEL 8.8 a strong choice for organizations that prioritize data protection.

RHEL 8.8 continues to enhance package management with DNF (Dandified YUM), a more efficient and secure package manager that simplifies software installation, updates, and dependency management. Application Streams allow multiple versions of software packages to coexist on a single system, giving developers and administrators the flexibility to choose the best software versions for their needs.

The growing importance of containerization is reflected in RHEL 8.8’s strong support for containerized applications. Podman, Buildah, and Skopeo are included, allowing businesses to build, deploy, and manage containers securely without requiring a central container daemon. Podman’s rootless container support further strengthens security by reducing the risks associated with privileged container execution.

Virtualization capabilities in RHEL 8.8 have also been refined. The integration of Kernel-based Virtual Machine (KVM) and QEMU ensures that enterprises can efficiently deploy and manage virtualized workloads. The Cockpit web interface provides an intuitive dashboard for administrators to monitor and control virtual machines, making virtualization management more accessible.

For businesses operating in cloud environments, RHEL 8.8 seamlessly integrates with leading cloud platforms, including AWS, Azure, and Google Cloud. Optimized RHEL images ensure smooth deployments, reducing compatibility issues and providing a consistent operating experience across hybrid and multi-cloud infrastructures.

Networking improvements in RHEL 8.8 further enhance system performance and reliability. The updated NetworkManager simplifies network configuration, while enhancements to IPv6 and high-speed networking interfaces ensure that businesses can handle increased data traffic with minimal latency.

Storage management in RHEL 8.8 is more robust, with support for Stratis, an advanced storage management solution that simplifies volume creation and maintenance. Enterprises can take advantage of XFS, EXT4, and LVM (Logical Volume Manager) for scalable and flexible storage solutions. Disk encryption and snapshot management improvements further protect sensitive business data.

Automation is a core focus of RHEL 8.8, with built-in support for Ansible, allowing IT teams to automate configurations, software deployments, and system updates. This reduces manual workload, minimizes errors, and improves system efficiency, making enterprise IT management more streamlined.

Monitoring and diagnostics tools in RHEL 8.8 are also improved. Performance Co-Pilot (PCP) and Tuned provide administrators with real-time insights into system performance, enabling them to identify bottlenecks and optimize configurations for maximum efficiency.

Developers benefit from RHEL 8.8’s comprehensive development environment, which includes programming languages such as Python 3, Node.js, Golang, and Ruby. The GCC (GNU Compiler Collection) system compiler, with newer compiler versions available through GCC Toolset, ensures compatibility with a wide range of applications and frameworks. Additionally, enhancements to the Web Console provide a more user-friendly administrative experience.

One of the standout features of RHEL 8.8 is its long-term support and enterprise-grade lifecycle management. Red Hat provides extended security updates, regular patches, and dedicated technical support, ensuring that businesses can maintain a stable and secure operating environment for years to come. Red Hat Insights, a predictive analytics tool, helps organizations proactively detect and resolve system issues before they cause disruptions.

In conclusion, RHEL 8.8 is a powerful, secure, and reliable Linux distribution tailored for enterprise needs. Its improvements in security, containerization, cloud integration, automation, and performance monitoring make it a top choice for businesses that require a stable and efficient operating system. Whether deployed on physical servers, virtual machines, or cloud environments, RHEL 8.8 delivers the performance, security, and flexibility that modern enterprises demand.


Text

The Transformative Power of Containers in Cloud Computing

Cloud computing has changed how companies deploy and manage their applications. With growing demand for flexible, reliable, and portable solutions, containers have become an essential part of cloud-based environments. Containers allow developers to build, test, and deploy applications across multiple platforms with high performance and minimal downtime.

Ready to master cloud technology? A cloud computing course in Bangalore is your gateway to understanding the practical significance of containerization and its impact on modern cloud strategies. This post explains how containers have revolutionized cloud computing and solidified their role in today's digital landscape.

What Are Containers?

Containers are lightweight, standalone, executable software packages that include everything needed to run an application: code, runtime, system tools, libraries, and dependencies. In contrast to virtual machines (VMs), each of which needs a complete guest operating system, containers share the host OS kernel. This makes containers much more efficient in terms of performance and resource usage.

Key Features of Containers

Portability: The application runs consistently in various environments, from the developer's laptop or desktop to cloud servers.

Scalability: Containers can be quickly scaled up or down, making them perfect for cloud-based applications.

Efficiency: They consume fewer resources than virtual machines, which reduces the cost of infrastructure.

Isolation: Each container runs in its own isolated environment, which helps avoid conflicts between different applications.

The Importance of Containers in Cloud Computing

Containers are not just a trend; they are a necessity in modern cloud computing environments. They enable companies to launch applications swiftly and efficiently. Cloud-based companies are increasingly seeking professionals who are well-versed in containerization, making a cloud computing course in Bangalore a strategic choice for enhancing your skills.

1. Improved Application Deployment

Traditional deployment techniques involve installing software directly on multiple machines, where differences in operating systems and library versions cause compatibility problems. Containers solve this issue by bundling software with its dependencies to ensure a consistent running environment.
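To make the idea of bundling software with its dependencies more concrete, here is a minimal Python sketch that renders the text of a simple Dockerfile for a Python application. The function name `render_dockerfile`, the base image, and the file names are all assumptions chosen for illustration; a real project would adapt them to its own layout.

```python
def render_dockerfile(base_image: str, requirements_file: str, entrypoint: str) -> str:
    """Return the text of a minimal Dockerfile for a Python application."""
    lines = [
        f"FROM {base_image}",
        "WORKDIR /app",
        # Copy and install dependencies first so this layer stays cached
        # until the requirements file changes.
        f"COPY {requirements_file} .",
        f"RUN pip install --no-cache-dir -r {requirements_file}",
        # Copy the application code itself.
        "COPY . .",
        f'CMD ["python", "{entrypoint}"]',
    ]
    return "\n".join(lines) + "\n"


# Hypothetical inputs: adjust the image tag and file names to your project.
print(render_dockerfile("python:3.12-slim", "requirements.txt", "app.py"))
```

The resulting image carries the interpreter, the libraries, and the application together, which is why it behaves the same on a laptop and on a cloud server.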

For example, Netflix uses containerized microservices to manage its vast streaming platform effectively. This lets Netflix update individual services in isolation without impacting the system as a whole.

2. Enhanced DevOps and CI/CD

DevOps teams rely on Continuous Integration/Continuous Deployment (CI/CD) pipelines to deliver software updates rapidly. Containers speed up this process by enabling rapid testing and deployment.
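A CI/CD pipeline can be thought of as an ordered list of stages that stops at the first failure. The Python sketch below shows that fail-fast behavior; the stage names and the `run_pipeline` helper are hypothetical, and the lambdas stand in for real steps such as building an image or running tests inside a container.

```python
from typing import Callable, List, Tuple

# A stage is a name plus an action that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, action in stages:
        if not action():
            break
        completed.append(name)
    return completed


# Hypothetical stages; each lambda is a placeholder for real work.
stages: List[Stage] = [
    ("build image", lambda: True),
    ("run tests in container", lambda: True),
    ("push image", lambda: True),
    ("deploy", lambda: True),
]
print(run_pipeline(stages))
```

Because every stage works with the same container image, a build that passes its tests is the exact artifact that gets deployed.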

Businesses such as Spotify and Uber make use of containers to automate updates, which reduces downtime while increasing user satisfaction. Professionals who want to master DevOps methods can take advantage of a cloud computing certificate in Bangalore to gain a better understanding of container-driven automation.

3. Better Resource Utilization

Containers are lightweight and use fewer resources than virtual machines. Because they share the host OS kernel instead of each running a full guest operating system, many containers can run efficiently on the same machine.
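A back-of-the-envelope calculation shows why the shared kernel matters. In the Python sketch below, every number is a made-up assumption for illustration (host size, application footprint, and per-instance overhead), not a measurement; only the arithmetic is the point.

```python
def instances_per_host(host_memory_mb: int, app_memory_mb: int, overhead_mb: int) -> int:
    """Return how many instances fit in host memory, given a fixed per-instance overhead."""
    return host_memory_mb // (app_memory_mb + overhead_mb)


# All figures below are hypothetical and chosen only to illustrate the arithmetic.
HOST_MEMORY_MB = 65536       # a 64 GiB host
APP_MEMORY_MB = 512          # memory the application itself needs
VM_OVERHEAD_MB = 1024        # assumed cost of a full guest OS per VM
CONTAINER_OVERHEAD_MB = 16   # assumed per-container overhead

vms = instances_per_host(HOST_MEMORY_MB, APP_MEMORY_MB, VM_OVERHEAD_MB)
containers = instances_per_host(HOST_MEMORY_MB, APP_MEMORY_MB, CONTAINER_OVERHEAD_MB)
print(f"VMs per host: {vms}, containers per host: {containers}")
```

Under these assumptions the same host fits roughly three times as many containers as VMs; real ratios depend heavily on the workload.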

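Google, for instance, has reported launching billions of containers every week on its internal cluster manager, Borg, the system that inspired Kubernetes, an open-source container orchestration platform. Kubernetes helps schedule containers efficiently across machines, which improves resource allocation and reduces operational expenses.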

4. Multi-Cloud and Hybrid Cloud Compatibility

Companies often use several cloud providers to avoid vendor lock-in, an approach known as a multi-cloud strategy. Containers support this approach because they run consistently across different cloud providers.

For example, banks that handle sensitive data typically deploy applications in hybrid cloud configurations that combine public and private cloud services. Containers make this setup more manageable by maintaining consistency across all the different cloud environments.

Popular Container Tools in Cloud Computing

A variety of containerization software tools have become popular in cloud computing. These tools help businesses automate and manage their apps effectively. Anyone who wants to learn more about using these tools can attend a cloud computing course in Bangalore for practical instruction.

1. Docker

Docker is the most widely used container platform. It simplifies application packaging and deployment, allowing developers to build lightweight and portable containers that function consistently across various environments.

2. Kubernetes

Kubernetes is orchestration software that automates container deployment, scaling, and management. It helps ensure that apps are always in operation and efficiently handle fluctuating demands.
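To give a feel for how automated scaling works, the Python sketch below implements the ratio formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, the default bounds, and the sample numbers are assumptions for this example, and the real controller also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to metric load, within bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured minimum and maximum (hypothetical defaults).
    return max(min_replicas, min(max_replicas, raw))


# Example: 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
```

When load drops, the same formula yields a smaller number, which is how the platform scales back down.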

3. OpenShift

OpenShift, created by Red Hat, is a Kubernetes-based platform for building, managing, and deploying applications. It is extensively used by companies seeking a secure container-based solution.

Real-Life Example: How Containers Power E-Commerce

E-commerce companies experience fluctuating traffic volumes, particularly during the holiday or sales seasons. Traditional monolithic applications might struggle to cope with rapid traffic increases, which can lead to lost revenue and downtime.

Businesses such as Amazon and Flipkart can scale each of their services separately using containers. For instance, the checkout process can be scaled independently from the product search function to ensure a seamless shopping experience.
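Independent scaling can be sketched as a small capacity plan in which each service gets its own replica count. In the Python example below, the service names, traffic figures, and per-replica capacities are all invented for illustration and do not describe any real retailer's system.

```python
import math
from typing import Dict


def replicas_needed(requests_per_second: float, capacity_per_replica: float) -> int:
    """Return the replicas required to serve the load, with at least one running."""
    return max(1, math.ceil(requests_per_second / capacity_per_replica))


def plan(traffic: Dict[str, float], capacity: Dict[str, float]) -> Dict[str, int]:
    """Compute a replica count for each service independently of the others."""
    return {name: replicas_needed(rps, capacity[name]) for name, rps in traffic.items()}


# Hypothetical requests per second that one replica of each service can handle.
capacity = {"search": 200.0, "checkout": 50.0}

# Hypothetical traffic on a normal day and during a sale.
normal_day = {"search": 400.0, "checkout": 40.0}
sale_day = {"search": 1200.0, "checkout": 400.0}

print("normal:", plan(normal_day, capacity))
print("sale:  ", plan(sale_day, capacity))
```

In this made-up scenario checkout grows far more than search during the sale, and only the service under pressure receives the extra containers.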

A cloud computing certificate program in Bangalore covers cloud deployment and containerization methods, which can benefit professionals who wish to enter highly sought-after fields.

Future of Containers in Cloud Computing

Container adoption is expected to grow as companies look for more effective cloud solutions. As AI and machine learning advance, containers are expected to play a crucial role in managing complex workloads within cloud computing.

Numerous companies are investing in education programs to increase their knowledge of containers and related technologies. If you're hoping to gain real-world experience while also advancing your career prospects, enrolling at the best cloud computing institute in Bangalore will provide you with an in-depth understanding of the management of containers.

Conclusion

Containers have changed the world of cloud computing. They provide a highly scalable, efficient, and flexible solution for deploying applications. Industries worldwide rely on containerized systems to improve resource utilization, performance, and security.

If you're looking to build a career in cloud computing, then gaining knowledge of containers is an absolute requirement. Taking a cloud computing training course in Bangalore can equip you with the knowledge required for working with containers, Kubernetes, and various other cloud-related technologies. Furthermore, obtaining a cloud computing certificate in Bangalore could increase your credibility and give you access to new career possibilities.

Cloud computing is evolving quickly, so staying on top of the most recent technologies is essential. Selecting a reputable cloud computing institute in Bangalore is a sure way to get industry-relevant information and hands-on experience in containerization that will set you apart in the job market.
